Source: Intel

Intel has received “lots” of requests for custom-designed chips and software that work specifically with these chips, as data centres look to run faster, cheaper and greener while crunching and storing more data in future.

More such customers are asking for custom server processors, said Ron Kasabian, Intel’s general manager for Big Data solutions, while others have looked to streamline their data centre operations with software that is optimised to run with Intel hardware, such as its own version of Hadoop.

The plan, he added, was still to create software that runs well with Intel’s server chips, such as its Xeon processors, before attempting to manufacture custom-designed chips for some of the biggest data centres in the world.

He stopped short of saying how many customers had asked for these chips, but added that the cost involved would have to be beneficial to both Intel and its customers.

Last month, the company surprised many by saying it was making custom chips for eBay and Facebook, which run huge data crunching and storage operations that serve millions of users a day.

Its business had always been centred on the mass production of identical chips, unlike smaller rivals such as AMD and even Arm, which have been making inroads with custom chips.

The market leader in server chips by far, Intel also surprised many market watchers recently by creating its own version of Hadoop, open source software that is commonly used to manage many big data centres.

Optimised for Intel hardware, this version gives “assurance” to big customers that are not sure about trusting their operations to small Hadoop players in the market, said Kasabian.

Ultimately, the end game is still to sell Intel silicon, he told Techgoondu, at an event here in Ho Chi Minh City in Vietnam aimed at showing off the chipmaker’s vision of the data centre of the future.

The company knows it is in new territory as it attempts to win over customers looking to crunch and store ever more data in future.

Many such big customers are asking to “strip down” server components to a minimum to cut down power usage, while having specific software instructions – similar to ones used in PC chips for many years – to perform particular tasks such as recognising faces in a series of videos.

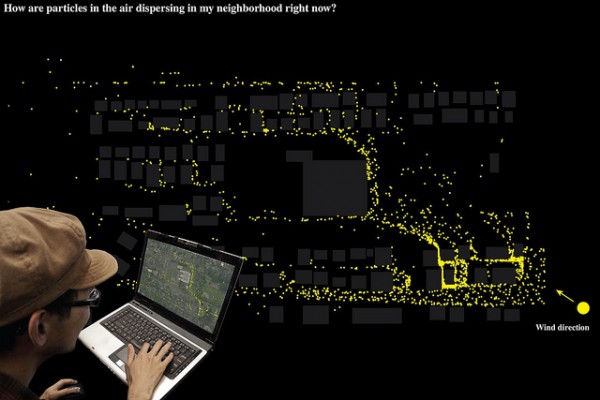

Besides the Facebooks and Googles of the world, more corporations and governments worldwide expect to better analyse and use petabytes of data collected everywhere from cellphones to environment sensors to predict future trends.

They may want to identify patterns, such as how likely it is that a request to connect to a server comes from a potential hacker, or which products and services customers will most likely buy in future, to better target sales efforts.

Intel, for example, says it generated a US$5 million increase in sales in Asia in the first quarter of this year by collecting customer sentiment gleaned from public feedback channels and telling its sales teams what to cross- or up-sell.

The huge amount of data collected can also be used by the public directly. In Vietnam, Intel has teamed up with the authorities in Danang city to build a green data centre, using Intel’s Data Center Manager and other technologies.

This will provide 135 e-government applications as well as process the data from sensors embedded in roads, highways and buses for a smart city project. Video screens and mobile apps will show commuters when buses will arrive and even alert them to how many passengers will be on the vehicle when it arrives, according to Intel.

For now, the adoption of such Big Data-enabled analytics in Asia is still six to 12 months behind the United States, Kasabian noted, with the exception of China where big multi-nationals appear to be taking the lead.

However, the chipmaker expects the take-up to accelerate in the region, as the company sells its vision of future data centres through proof-of-concept projects. These include potential customers such as the Taiwan Stock Exchange, which has been evaluating Intel’s server technology for its data centre.