When I saw the Meta 2 promotional video that featured Nike designers donning the glasses, grabbing thin air and manipulating the conceptual shoe projected onto the space in front of them like holograms, I thought to myself: Cool, quite the asset when it becomes reality in the next decade.

I was wrong. The Metavision Meta 2 developer kit can be pre-ordered now for US$949, and, thanks to the company’s partnership with Dell, is being piloted with actual Nike designers. In fact, Metavision’s reps at Dell EMC World this week quipped that a meeting will take place next week.

Still, who would blame me for thinking the technology isn’t ready for prime time? In the same promo video, designers make design changes on the fly with pen and totem on the Dell Canvas and share the work with teammates, with all changes displayed in real time in 3D right above their screens.

Welcome to the not-so-distant future of virtual reality (VR), augmented reality (AR) and their lucrative commercial applications.

Virtual reality transports its users into another world, such as a crime scene in the distant future. Augmented reality lays elements onto an existing reality to make it more meaningful, such as scattering virtual evidence across an empty physical room to turn it into a crime scene.

For a long time, VR and AR have been limited to the arenas of entertainment and gaming, but this is set to change.

Industrial and healthcare applications will make up over half of all use cases of these immersive technologies by 2020 as commercial applications are “gamified” for them, according to Dell’s director of commercial VR and AR Gary Radburn.

Quoting a 2015 study by ABI Research for these figures, he highlighted that in contrast, gaming will make up just 8 per cent of all use cases by then, and media and entertainment a distant 3 per cent.

Dell is taking a partnership approach to entering the immersive technology field, working with firms it deems to be providing viable VR and AR kit that complements its own wares, and selling the combination as a business solution to its clients.

The Metavision Meta 2 is an example of such a collaboration. The hardware and software of the Meta 2 are basically the work of Metavision, and the company is just as hands-on in those meetings with Nike.

Dell lends its marketing and sales channel firepower to push out these glasses with its Precision workstations (and maybe the Canvas) to customers large and small that Metavision (and by extension, Dell) probably could not have reached alone.

The future is here

What strikes me about the Meta 2 is how incredibly comfortable it is to wear. Yes, it still looks like a huge block of matter, runs an (adjustable) strap over the top of your head and has cushioned sides.

At the back, however, a clicking wheel provides granular control over exactly how tightly the device fits the shape of your skull, while the strap on the top of the head handles the slack.

The end result is amazing. The Meta 2 does not feel like it is resting all its weight on my nose, as many other immersive headsets do.

For the first time, I feel like I am wearing a device that I would not mind having on all day, which can only be good for potential industrial designers donning these kits.

Neither does it droop and show you just half of the things you want to see unless you push it up with a free hand, which can happen when the mechanism that keeps the glasses in place is not doing its job well enough.

The showfloor at Dell EMC World also featured demos powered by Microsoft HoloLens (including one used to demo features of a BMW sports vehicle – cool), but those were such a world of pain to wear compared to the lighter and well-fitting Meta 2.

The Meta 2 also has a much wider field of view, four times larger than that of its rivals, if the reps from Metavision are to be believed.

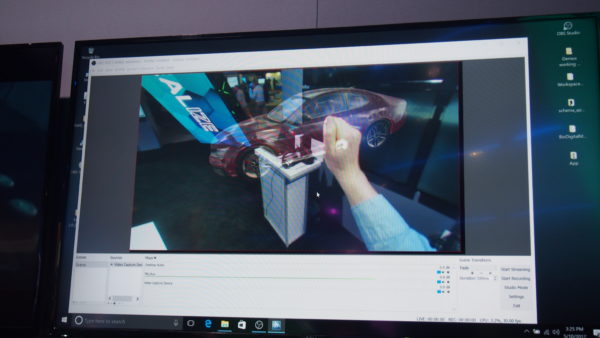

Indeed, the rendered car could sit anywhere within my field of vision, compared with the small square display area I saw during an RSA hologram demo powered by HoloLens.

Of course, unlike the HoloLens, the Meta 2 is a tethered product that must be connected to a computer to handle its graphical rendering, but that makes sense for the commercial design and realistic training applications that the Meta 2 will be used for.

Another thing I really liked about these AR glasses is that, since there is no need to obscure the user’s entire field of vision to present an alternate reality, one can keep one’s spectacles on while using the device. The ball is in your court now, VR gear makers.

Intuitive

The whole solution is powered by a twin set of screens with 2,560 x 1,440 resolution, a 720p front camera and an array of sensors to track the user’s position and hands. It also comes with four speakers near the ears of the wearer for audio output.

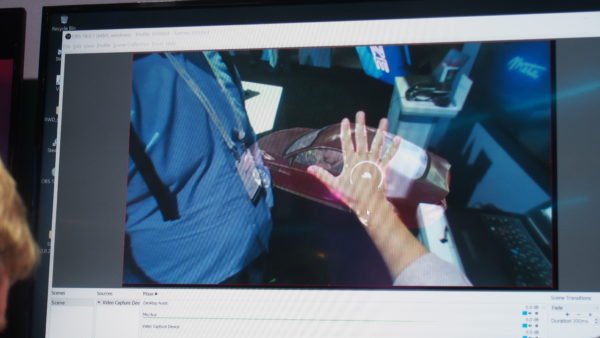

You simply have to hold your hand at a spot long enough for the sensors to detect it, then you are ready to interact with the projected hologram. A white wheel will fill itself up as it detects your hand, and turn into a blip once it recognises the limb as a controller.

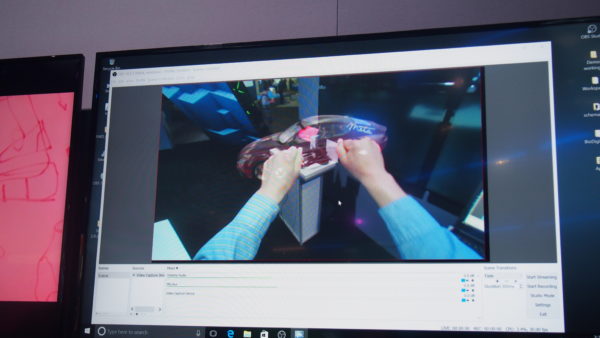

During my tests, I could move a sample car along all axes with one hand, and registering my other hand allowed me to rotate the car in all directions and zoom in and out of the 3D drawing.

I could imagine the solution becoming even more complete if the device supported eye tracking. One could then navigate drop-down menus to add and remove elements from the render in the blink of an eye, literally.

Overall, I am very impressed by what the Meta 2 has to offer. The interactivity and realism of the holograms can truly transform the way products are prototyped before production, or the way firefighters and doctors are trained, for instance.

To get there, however, it would be nice if the screen were sharper so the renders could be life-like and every detail open to scrutiny. As it is, the car still looks very much like a render and not enough like a physical product.

Interoperability and software support will likely be an issue for a while too. The totems and dials on the Dell Canvas and Microsoft Surface Studio do not have full support within the Adobe Creative Suite at the moment, for instance.

It will probably be a while longer before we have the kind of equipment that can speak the same language and go beyond malleable renders to facilitate seamless, team-based collaboration in real time.

But I am sure it is just a matter of time before the technology matures to the point where all these issues can be overcome.

After mixed-format TV shows and augmented tours, it seems VR and AR technologies are on the cusp of discovering their killer use case, and I look forward to the possibilities they have to offer.