A team of researchers from the National University of Singapore (NUS) recently developed an artificial brain and skin that give robots a sense of touch to let them better handle tasks such as grasping a bottle or, in future, feeding a person.

The breakthrough in artificial intelligence (AI), announced a fortnight ago, came from experts in the university’s computing and materials science departments.

The team developed an artificial brain that mimics biological neural networks and can run on neuromorphic processors such as Intel’s Loihi.

This helps give a robot the senses of touch and vision by analysing what it is grasping with its robotic arms or seeing through its camera.

For the sense of touch, the NUS team used an artificial skin that can detect touches more than 1,000 times faster than the human sensory nervous system. It can also identify the shape, texture and hardness of objects 10 times faster than the blink of an eye.

Together, the two technologies from this cross-disciplinary research are expected to help a robot “feel” its grip on a cup, for example, so that the cup does not slip out of its grasp. The robot can also read Braille by converting the tiny bumps felt by its hand into meaning.

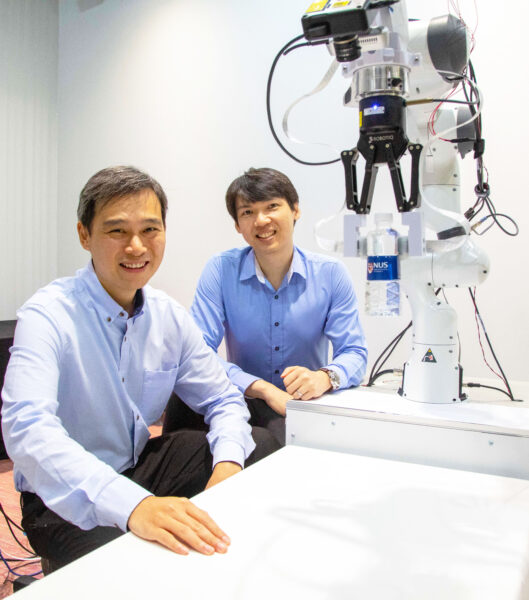

In this month’s Q&A, we speak with two NUS professors who led the team that produced the breakthrough.

Assistant Professor Harold Soh from the School of Computing and Assistant Professor Benjamin Tee from the Faculty of Engineering and Institute for Health Innovation & Technology say that, despite these technological advances, the smarter machines of the future will need to learn more through their interactions with humans.

Q: We’ve been told over the years that AI is still a long way from achieving the intelligence of the human brain. How does neuromorphic computing mimic the way the brain works and how far is AI from being as smart as a human?

Asst Prof Soh: Unlike conventional computing, neuromorphic computing is event-based and asynchronous: information is processed using discrete events called spikes.

This allows neuromorphic sensors like our NeuTouch and neuromorphic processors like Intel’s Loihi to be more efficient for certain kinds of computation.
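To make the idea of event-based processing more concrete, here is a minimal Python sketch of a single leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is only an illustration under stated assumptions, not the NUS team’s model and not Loihi’s programming interface: the class `LIFNeuron`, the function `run` and the parameter values (`tau`, `threshold`) are hypothetical. The neuron does work only when an input spike arrives; between events its potential simply decays, so the decay is computed in one step rather than ticked through on a clock.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Hypothetical leaky integrate-and-fire neuron, updated only when an input event arrives."""
    tau: float = 10.0        # membrane time constant in ms (assumed value)
    threshold: float = 1.0   # firing threshold (assumed value)
    v: float = 0.0           # current membrane potential
    last_t: float = 0.0      # time of the previous update in ms

    def receive(self, t: float, weight: float) -> bool:
        """Process one input spike at time t (ms); return True if the neuron fires."""
        # Apply the leak for the whole idle interval at once instead of
        # stepping a clock through it: nothing is computed while input is silent.
        self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0  # reset the potential after emitting a spike
            return True
        return False


def run(events, neuron):
    """Feed (time_ms, weight) input events in time order; return the output spike times."""
    return [t for t, w in sorted(events) if neuron.receive(t, w)]


if __name__ == "__main__":
    # Two inputs 1 ms apart, then two widely spaced inputs.
    events = [(1.0, 0.6), (2.0, 0.6), (50.0, 0.6), (80.0, 0.6)]
    print(run(events, LIFNeuron()))  # → [2.0]
```

In this example the first two inputs arrive close together and push the potential over the threshold, so the neuron fires at 2 ms; the later inputs are spaced far enough apart that the charge leaks away and no further spike occurs. Covering 80 ms of time takes just four updates, one per event, which is the kind of saving event-based hardware aims for when input is sparse.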

While I think neuromorphic computing is exciting and has great potential, it won’t by itself make AI as smart as a human. There are many challenges ahead.

Right now, we have “narrow AI”, which is very good at a limited number of tasks (usually just one) in constrained scenarios. For example, an AI that plays Go won’t be able to drive your car and vice versa.

Even an AI that plays Go generally won’t be able to play any other game, or even Go with slightly changed rules. This is very different from the “general intelligence” that humans possess.

We are good at numerous tasks and can adapt quickly to the demands of the situation. We understand context and can selectively apply our knowledge to solve new problems. Building an AI with these capabilities remains an important open challenge.

Q: Is computing power the main driver for smarter machines of the future?

Asst Prof Soh: Computing power is certainly an important ingredient. So is data. But we also need clever algorithms that are able to make sense of that data, that is, to transform it into useful information. On top of that, we need ways to represent that information so that it can be used for reasoning and goal achievement.

I also believe machines will need to physically interact with the world to better learn from it, which means advances in actuation and robotics are important. There are many crucial pieces that go into building smarter machines.

Q: How similar is this artificial skin to a human hand when it comes to the sense of touch?

Asst Prof Tee: The skin responds to touch in a very similar way to human skin. There are thousands of touch sensors in the human fingertip that are constantly detecting tactile contact.

Our artificial skin has the same ability to transmit tactile contact information simultaneously across the entire sensor area. This is enabled by our Asynchronous Coded Electronic Skin (ACES) technology.

Q: How does the sense of touch build trust between machines and people, for example, when robots are one day caregivers?

Asst Prof Tee: To trust that someone will hold you without letting you fall, humans rely on touch. In the same way, for humans to trust robots, and for robots to understand human interactions involving physical contact, robots need to be able to sense touch as quickly and efficiently as we do when we use our skin as a touch feedback surface.

Moreover, even simple tasks like pouring a cup of tea require the robot to grasp physical objects properly. Feeding someone is another activity that requires a robot to have a sense of touch, for example so that the food is not spilled.

At the end of the day, we can only trust robots when they can perform tasks successfully without causing any damage or harm. This requires robots to have a level of performance in skin sensing that is as good as, if not better than, humans.