In 10 years’ time, getting a computer to perform an action could be as simple as thinking about it. Though this sounds astoundingly like something from the pages of a science fiction novel, researchers at Facebook Reality Labs (FRL) recently demonstrated a wrist-based input technology that aims to do just that.

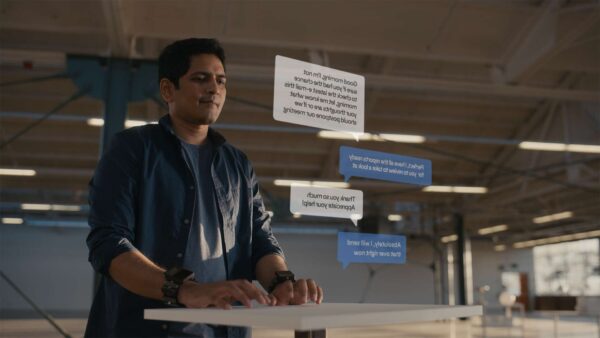

The technology combines augmented reality (AR), electromyography (EMG) and sensor-filled wrist wearables into a wrist-based computing platform. In essence, users just think about the action they want to perform, such as typing, swiping or playing an archery simulator game, instead of actually moving their hands and fingers.

An EMG device translates the electrical signals that motor nerves send to the hand into digital commands. With the device on her wrist, a user can simply flick her fingers in the air to control virtual inputs, whether she is wearing an AR headset or interacting with the real world. She can also “train” it, using AI, to sense what her fingers intend to do, so that actions happen even when her hands are completely still.

Facebook is deep into this research because it wants to build an interface that makes it easier for people to interact with their devices and the world around them. Last week, it gave a sneak peek of FRL’s work on wrist-based computing.

In demos, it showed how an archer can draw the string of a bow in a simulator using a wrist-worn wearable that senses the nerve activity controlling the hand and fingers. The wearable also lets users type on a virtual desktop keyboard.

Facebook chief technology officer Mike Schroepfer reckons that it could be five to 10 years before the technology becomes widely available. What the research has shown so far is only a proof of concept.

But “turning it to something that we can make and use without tech support is much harder than people realise,” he said in a virtual media briefing last week. “This is what we’re doing now to make it usable for consumers. It will take years but this is possible.”

Facebook asserts that although the wristbands read neural signals, the technology is not akin to mind reading. In a recent blog post, it said: “You have many thoughts and you choose to act on only some of them.”

“When that happens, your brain sends signals to your hands and fingers telling them to move in specific ways in order to perform actions like typing and swiping,” it added. “This is about decoding those signals at the wrist — the actions you’ve already decided to perform — and translating them into digital commands for your device.”
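To make the idea of decoding signals at the wrist a little more concrete, here is a deliberately simple sketch. Facebook has not published its decoder, and FRL describes using AI, so this is not its method. The sketch assumes a single EMG channel already available as a list of numbers; the function names, window size, threshold and the “click” command are all hypothetical. It measures muscle activity with a root-mean-square (RMS) envelope over a sliding window and emits a command each time that activity rises past a threshold.

```python
import math
from typing import List, Tuple

def rms_envelope(samples: List[float], window: int) -> List[float]:
    """Root-mean-square of each full sliding window: a rough measure of muscle activity."""
    envelope = []
    for i in range(len(samples) - window + 1):
        chunk = samples[i:i + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope

def decode_commands(samples: List[float], window: int = 4,
                    threshold: float = 0.4) -> List[Tuple[str, int]]:
    """Emit a hypothetical ("click", index) command on each rising edge of activity.

    Assumption: one EMG channel, already filtered and scaled; the window size,
    threshold and "click" command are illustrative values, not FRL's.
    """
    commands = []
    active = False
    for i, level in enumerate(rms_envelope(samples, window)):
        if level >= threshold and not active:
            commands.append(("click", i))  # activity just crossed the threshold
            active = True
        elif level < threshold:
            active = False  # muscle relaxed; ready for the next gesture
    return commands

# Synthetic signal: rest, a burst (finger flick), rest, a weaker burst, rest.
signal = [0.0] * 8 + [1.0, -1.0] * 4 + [0.0] * 8 + [0.9, -0.9] * 4 + [0.0] * 8
print(decode_commands(signal))  # prints [('click', 5), ('click', 21)]
```

A real system would likely need many channels and a learned model to tell, say, a pinch from a swipe; a fixed threshold like this one only shows the general shape of turning a continuous nerve signal into a discrete digital command.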

At the heart of Facebook’s research is the idea of always-available AR glasses as a natural and intuitive way to connect with people. For this to work, there must be a ubiquitous input technology: something that anybody could use in all kinds of situations encountered throughout the day, according to the Facebook blog.

“The wrist is a traditional place to wear a watch, meaning it could reasonably fit into everyday life and social contexts,” the company said. “It’s a comfortable location for all-day wear. It’s located right next to the primary instruments you use to interact with the world — your hands.”

“This proximity would allow us to bring the rich control capabilities of your hands into AR, enabling intuitive, powerful, and satisfying interaction,” stated Facebook.

Apple, Microsoft and other tech companies are also investing heavily in the development of AR, which could become the industry’s next major battleground.

Apple Glasses could launch as early as 2022. Apple has filed many patents for tracking finger and hand movements, aimed at improving the capabilities of Apple Glasses.

Facebook has been developing its AR/VR plans for many years. In 2014, it bought VR start-up Oculus. Today, nearly 10,000 of its 50,000 employees work in its Reality Labs division.