First it was GPUs to optimise computer gaming, then it was GPUs to power artificial intelligence (AI) and machine learning applications.

Now Nvidia, the US$355 billion microprocessor giant, is turning its technological prowess to helping scientists better understand the behaviour of quantum computers. Its new software library, cuQuantum, can dramatically speed up quantum circuit simulations running on GPUs.

Unveiled yesterday at Nvidia GTC, the company's annual technology conference, cuQuantum lets scientists cut simulation times sharply, potentially accelerating quantum computing research.

To demonstrate the new capabilities, Nvidia worked with researchers at the California Institute of Technology (Caltech) to simulate a sample of a computation that had been run on Sycamore, a quantum processor developed by Google. In 2019, Sycamore completed a task in 200 seconds that Google claimed would have taken a state-of-the-art supercomputer 10,000 years to finish.

The Nvidia-Caltech team generated a sample of the Google Sycamore simulation in 9.3 minutes on Selene, Nvidia’s in-house AI supercomputer, a task that 18 months ago experts thought would take days using millions of CPU cores.

The San Jose-based Nvidia expects the performance gains and ease of use of cuQuantum to make it a foundational element in every quantum computing framework and simulator at the cutting edge of this research.

At a media conference hours ago, Nvidia chief executive officer Jensen Huang said that while hundreds of millions of dollars have been invested in quantum computing research, quantum computers still have very limited IO (input/output) capability.

Scientists have to format the problem in such a way that quantum computers can solve it, he explained. “cuQuantum will enable researchers to do this – maybe a hybrid computer will emerge from this before (commercial) quantum computers appear.”

cuQuantum was one of several new products unveiled at GTC. The new microprocessors and software aim to accelerate large-scale AI operations in supercomputers and data centres as well as to boost the computational efficiency of visualisation and AI tools for autonomous vehicles. Early customers of these products include BMW, AWS and T-Mobile.

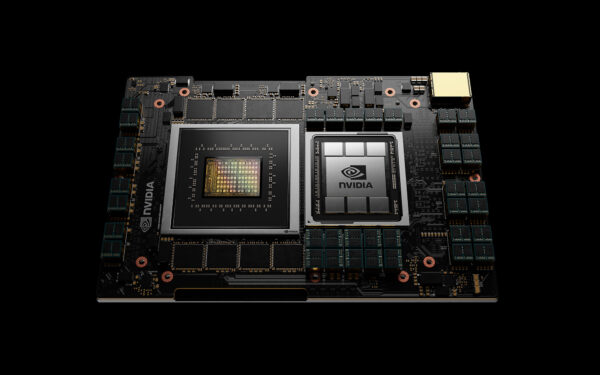

Huang also announced Nvidia Grace, the company's first data centre CPU and an Arm-based processor. It promises to deliver 10 times the performance of today's fastest servers on the most complex AI and high performance computing workloads.

Named after Grace Hopper, the computer scientist who pioneered programming in the 1950s, Grace is designed for giant-scale AI and high performance computing workloads such as natural language processing, recommender systems and AI supercomputing.

Grace will be able to analyse enormous datasets requiring both ultra-fast compute performance and massive memory. It combines energy-efficient Arm CPU cores with an innovative low-power memory subsystem to deliver high performance with great efficiency.

According to an Nvidia media statement, the Swiss National Supercomputing Centre and the Los Alamos National Laboratory in the US will both deploy Grace-powered supercomputers built by Hewlett Packard Enterprise, which are expected to come online in 2023.

Nvidia’s other new releases and upgrades include its Jarvis Interactive Conversational AI framework, which will give developers pre-trained deep learning models and software tools to create interactive conversational AI services.

With GPU acceleration, Jarvis can listen, understand and generate responses more quickly. It can be deployed in the cloud, in the data centre or at the edge.

It was built using models trained for several million GPU hours on more than one billion pages of text and 60,000 hours of speech data, covering different languages, accents, environments and jargon, to achieve world-class accuracy. American telecom operator T-Mobile has used it to provide real-time insights and recommendations.

Jarvis is trained on unlabelled data, which means it needs large volumes of data to keep learning. Huang expects every cloud vendor to need it because "language is important whether it is a search, e-commerce or social application".

Another new release is the Nvidia Omniverse Enterprise platform, which enables design teams to collaborate in real time across multiple software suites. Available as a subscription product, it lets 3D production teams work simultaneously in a virtual world from anywhere, on any device.

The company also showed off updates to Nvidia Drive, a tool set for autonomous vehicle makers. Volvo Cars is using the new Nvidia Drive Orin to make its next-gen cars more programmable and perpetually upgradeable via over-the-air software updates.

Other carmakers, including startups and electric vehicle (EV) brands, will also use Nvidia Drive to build software-defined vehicles with improved AI capabilities for autonomous driving. Customers include EV companies Faraday Future in the US and VinFast in Vietnam, and Chinese automakers Nio and SAIC.

Huang said the thousands of small microprocessors used in a car can be consolidated into a few large chips. "When this happens, the chips become complex but the ability to manage the supply chain improves. The Nvidia Drive Orin platform has the ability to do this."