Brought to you by Keysight Technologies

The vision of fully autonomous vehicles is fast approaching, promising to improve the overall efficiency of transportation systems and enable greater driver and passenger safety.

However, making this vision a reality requires automotive OEMs to move beyond the current state of vehicle autonomy, as defined by the Society of Automotive Engineers (SAE) levels, from level 2+/3 to level 5.

Getting there creates a unique set of challenges that conventional solutions are ill-equipped to address, both in testing the automotive radar sensors used in autonomous drive systems and in training autonomous driving algorithms.

To understand more about the test challenges at hand and the new approach needed for OEMs to achieve the next level in vehicle autonomy, we turned to Ee Huei Sin, senior vice president of Keysight Technologies, and president of Keysight’s Electronic Industrial Solutions Group, for answers.

1. What are the key challenges automotive OEMs must overcome to advance vehicle autonomy?

There are two key challenges, or gaps, that automotive OEMs face.

- The gap between software simulation and road testing.

Today, sensors and control modules are tested by simulating environments with software-in-the-loop (SIL) testing. Software simulation is valuable, but it cannot fully replicate the real world or the potentially imperfect responses of real sensors. Fully autonomous vehicles must know how to deal with those imperfections.

Road testing of the complete integrated system within a prototype or road-legal vehicle enables OEMs to validate the final product before bringing it to market.

While road testing is vital and a necessary part of the development process, its cost, the time it requires, and the difficulty of making it repeatable make relying on real-world road testing alone unrealistic. With road testing alone, it would take hundreds of years for vehicles to become reliable enough to navigate urban and rural roadways safely 100 per cent of the time.

- Training Advanced Driver Assistance Systems (ADAS)/Autonomous Vehicle (AV) algorithms for real-world conditions.

Testing automotive radar is critical to training autonomous driving algorithms. These algorithms use the data acquired by a vehicle’s radar sensors to make decisions about how the vehicle will respond in any given driving situation. When those algorithms are not properly trained, they may make unexpected decisions that undermine driver, passenger or pedestrian safety.

By way of comparison, consider the many decisions a person must make while driving a car. It takes time and experience to become a good driver.

Taking vehicle autonomy to the next level requires complex systems that exceed the abilities of the best human drivers. Sensors, sophisticated algorithms, and powerful processors are the key pieces that, together, will make autonomous driving possible.

The sensors perceive the immediate environment, while the processors and algorithms allow the vehicle to make the right decisions and comply with road rules.

Extreme confidence in new ADAS functions is critical. With an unproven system, premature roadway testing is risky. The ability to emulate real-world scenarios that enable validation of actual sensors, electronic control unit (ECU) code, artificial intelligence (AI) logic, and more is needed.

Testing more scenarios, sooner, gives OEMs a clear sense of when they have tested enough and when they can confidently sign off on an ADAS function.

2. Can conventional test solutions address these gaps?

Today’s test systems do not effectively address these gaps. Some systems use multiple radar target simulators (RTSs), each presenting point targets to radar sensors and emulating horizontal and vertical position by mechanically moving antennas around.

The mechanical automation lengthens overall test time. Other solutions create a wall of antennas served by only a few RTSs, so an object can appear anywhere in the scene, but only a few objects can appear at the same time. In a static or quasi-static environment, this approach enables testing with a handful of targets moving laterally at speeds limited by how fast the robotic arms can move.

Current radar sensor test solutions also have a limited field-of-view (FOV) and cannot resolve objects at distances less than 4 metres. Testing radar sensors against a limited number of objects delivers an incomplete view of driving scenarios and masks the complexity of the real world.

3. What technology innovation is needed to address these gaps?

Addressing these gaps demands a new approach to radar sensor testing, one that shifts from object detection via target simulation to full traffic-scene emulation and that can be carried out in the lab before roadway testing.

Full-scene emulation in the lab lets OEMs test more driving scenarios sooner, using complex, repeatable scenes that can include a high density of objects (stationary or in motion), environmental characteristics, or any mix of these. The result is significantly faster ADAS/AV algorithm learning.

4. What is Keysight Technologies doing to address these challenges?

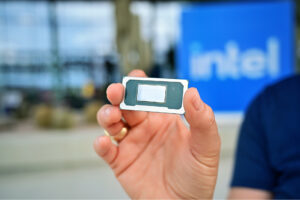

Keysight has released the Radar Scene Emulator solution for use in the lab, a first-to-market full traffic-scene emulator that combines hundreds of miniature radio frequency (RF) front ends into a scalable emulation screen representing up to 512 objects and distances as close as 1.5 metres.

The solution enables automotive OEMs to shift testing of complex driving scenarios from the road to the lab, where testing proceeds faster than it would on a test track.

5. Were any technological innovations required to bring the Radar Scene Emulator to market?

Yes. Delivering this solution required two key breakthroughs. First, Keysight developed a proprietary miniature RF front end, each with its own antenna. Second, Keysight integrated eight of those RF front ends on one circuit board. Sixty-four boards were then arranged in a semi-circular array to form the scalable emulation screen.
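The figures in this answer are consistent with the previous one: eight front ends on each of 64 boards gives 512 front ends, matching the limit of 512 emulated objects. Reading that limit as one object per front end is our inference; the interview does not spell out the mapping.

```latex
N_{\text{front ends}} = 8\ \frac{\text{front ends}}{\text{board}} \times 64\ \text{boards} = 512
```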

6. What are the key benefits of a full-scene emulation solution like Radar Scene Emulator?

The three key benefits of using Radar Scene Emulator are the ability to:

a. See the big picture. Keysight’s Radar Scene Emulator helps radar sensors see more, with a wider, continuous field of view (FOV) of ±70° azimuth and ±15° elevation, and supports both near and far targets. Static and dynamic targets can be generated at ranges from 1.5 m to 300 m and at velocities from 0 to 400 km/h.

Multi-target, multi-angle driving scenarios are addressed with angular resolution of less than 1 degree. This eliminates the gaps in a radar’s vision and enables better training of algorithms to detect and differentiate multiple objects in dense, complex scenes. As a result, autonomous vehicle decisions can be made based on the complete picture, not just what the test equipment sees.

b. Test real-world complexity. Keysight’s Radar Scene Emulator allows OEMs to emulate real-world driving scenes in the lab with every variation of environmental condition, traffic density, speed, distance, and total number of targets. Testing can be completed earlier and can cover everything from common scenes to corner cases, while minimising risk.

c. Accelerate learning. Keysight’s Radar Scene Emulator provides deterministic, repeatable emulation of real-world environments, so complex scenes that today can only be tested on the road, if at all, can be tested in the lab. Being able to test scenarios earlier, in the lab, speeds ADAS/AV algorithm learning while eliminating the inefficiencies of manual or robotic automation.
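Item a quotes target ranges of 1.5 m to 300 m, speeds up to 400 km/h and sub-degree angular resolution. To give a feel for what those figures ask of an emulator, here is a small sketch that converts them into textbook radar quantities: round-trip echo delay τ = 2R/c, Doppler shift f_d = 2v·f_c/c for a target closing radially, and the cross-range spacing one degree subtends at a given range. The 77 GHz carrier is an assumption (a common automotive radar band; the interview gives no frequency), and the function names are hypothetical. This is illustrative physics, not Keysight's implementation.

```python
import math

C = 299_792_458.0  # speed of light in m/s
CARRIER_HZ = 77e9  # ASSUMPTION: 77 GHz automotive radar; not stated in the interview


def round_trip_delay_s(range_m: float) -> float:
    """Echo delay an emulator must reproduce for a target at range_m (tau = 2R / c)."""
    return 2.0 * range_m / C


def doppler_shift_hz(speed_kmh: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Doppler shift for a target closing radially at speed_kmh (f_d = 2 v f_c / c)."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return 2.0 * v * carrier_hz / C


def lateral_separation_m(range_m: float, angle_deg: float) -> float:
    """Approximate cross-range spacing subtended by angle_deg at range_m (arc length)."""
    return range_m * math.radians(angle_deg)


if __name__ == "__main__":
    for r in (1.5, 300.0):
        print(f"range {r:>6.1f} m -> delay {round_trip_delay_s(r) * 1e9:8.2f} ns")
    print(f"400 km/h -> Doppler {doppler_shift_hz(400.0) / 1e3:.1f} kHz")
    print(f"1 deg at 100 m -> {lateral_separation_m(100.0, 1.0):.2f} m")
```

Under these assumptions the quoted range span corresponds to echo delays from about 10 ns to about 2 µs, 400 km/h gives a Doppler shift of roughly 57 kHz, and one degree at 100 m spans about 1.75 m, which helps explain why sub-degree resolution matters for separating adjacent vehicles.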

7. Does the Radar Scene Emulator work with any other Keysight solutions?

Yes. The Radar Scene Emulator is part of Keysight’s Autonomous Drive Emulation (ADE) platform. The platform was created through a multi-year collaboration between Keysight, IPG Automotive, and Nordsys.

The ADE platform exercises ADAS and AV software by rendering predefined use cases that apply time-synchronized inputs to the actual sensors and subsystems in a car, such as the global navigation satellite system (GNSS), vehicle to everything (V2X), camera, and radar.
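The interview does not describe how ADE synchronizes its inputs. Purely as an illustration of the idea of putting GNSS, V2X, camera and radar stimuli on one shared clock, this sketch merges per-sensor, time-sorted stimulus lists into a single timestamp-ordered timeline. The scenario format, `Scenario` and `synchronized_timeline` are hypothetical names invented for this example and are not part of any Keysight, IPG Automotive or Nordsys API.

```python
import heapq
from typing import Dict, Iterator, List, Tuple

# HYPOTHETICAL scenario format: each sensor channel maps to a list of
# (time_ms, stimulus) pairs, already sorted by time. Not a real ADE format.
Scenario = Dict[str, List[Tuple[float, str]]]


def synchronized_timeline(scenario: Scenario) -> Iterator[Tuple[float, str, str]]:
    """Merge all channels onto one shared clock, yielding (time_ms, channel, stimulus)."""
    streams = [
        [(t, channel, stimulus) for t, stimulus in events]
        for channel, events in scenario.items()
    ]
    # heapq.merge lazily interleaves the pre-sorted streams by timestamp.
    return heapq.merge(*streams, key=lambda event: event[0])


if __name__ == "__main__":
    scenario: Scenario = {
        "radar": [(0.0, "car ahead at 50 m"), (50.0, "car ahead at 48 m")],
        "camera": [(0.0, "frame 0"), (33.3, "frame 1")],
        "gnss": [(0.0, "fix A"), (100.0, "fix B")],
        "v2x": [(20.0, "brake warning from lead car")],
    }
    for t, channel, stimulus in synchronized_timeline(scenario):
        print(f"{t:6.1f} ms  {channel:<6} {stimulus}")
```

A lazy merge suits this kind of replay because each channel's stream can stay in its own sorted list while the consumer still sees a single, globally ordered sequence.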

As an open platform, ADE enables automotive OEMs and their partners to focus on the development and testing of ADAS systems and algorithms, including sensor fusion and decision-making algorithms.

Automotive OEMs can integrate the platform with commercial 3D modeling tools, hardware-in-the-loop (HIL) systems, and existing test and simulation environments.

Together, the Keysight Radar Scene Emulator and Autonomous Drive Emulation platform offer automotive OEMs the ideal solution to realise new ADAS functionality on the path to full vehicle autonomy.