When a deepfake video of Taylor Swift speaking fluent Mandarin emerged last week, it raised new questions about how easy to create and how widespread such AI-generated content will become in the months and years ahead.

At the same time, some Big Tech companies have embraced similar technology. Meta, for example, is using celebrities such as Snoop Dogg to generate custom animations for its messaging services.

At least Meta says it has put rules in place to prevent users from making the celebrities spout hate speech. And in the case of the Mandarin-speaking Taylor Swift video, the Chinese AI company behind it was upfront about having created it.

For many enterprises, AI-generated deepfakes could be both a threat and a boon, say experts, who add that it pays to tread carefully.

One obvious worry is that cyber attackers are already creating convincing videos, images, or audio recordings that deceive individuals into taking a desired action.

“Bad actors are increasingly turning to deepfake technologies to level up their social engineering scams,” said Stuart Wells, chief technology officer at Jumio, which provides identity verification technology to businesses.

“Mere seconds’ worth of source material is enough,” said Jonas Walker, director of Threat Intelligence at cybersecurity firm FortiGuard Labs.

“The more publicly-available information about a person, the easier it is to create deepfakes that are extremely difficult to verify,” he added.

For example, cybercriminals can generate fake videos of high-profile executives by feeding open-source information about these figures, such as recordings, into a generative AI model.

In August, Hong Kong police arrested six people from a fraud syndicate that had used AI deepfake technology to create doctored images for loan scams targeting banks and money lenders.

Another approach used by cyber fraudsters is to replicate voices to use in voice phishing (vishing) attacks.

In such scenarios, the fraudsters call employees while assuming the identity of someone the employees trust. They then manipulate the victims into transferring money or disclosing sensitive information or trade secrets.

When employees fall for such social engineering and phishing attacks, it can result in data breaches for an organisation. Besides that, deepfakes enable threat actors to make false claims and hoaxes that can undermine the reputation of organisations, said Walker.

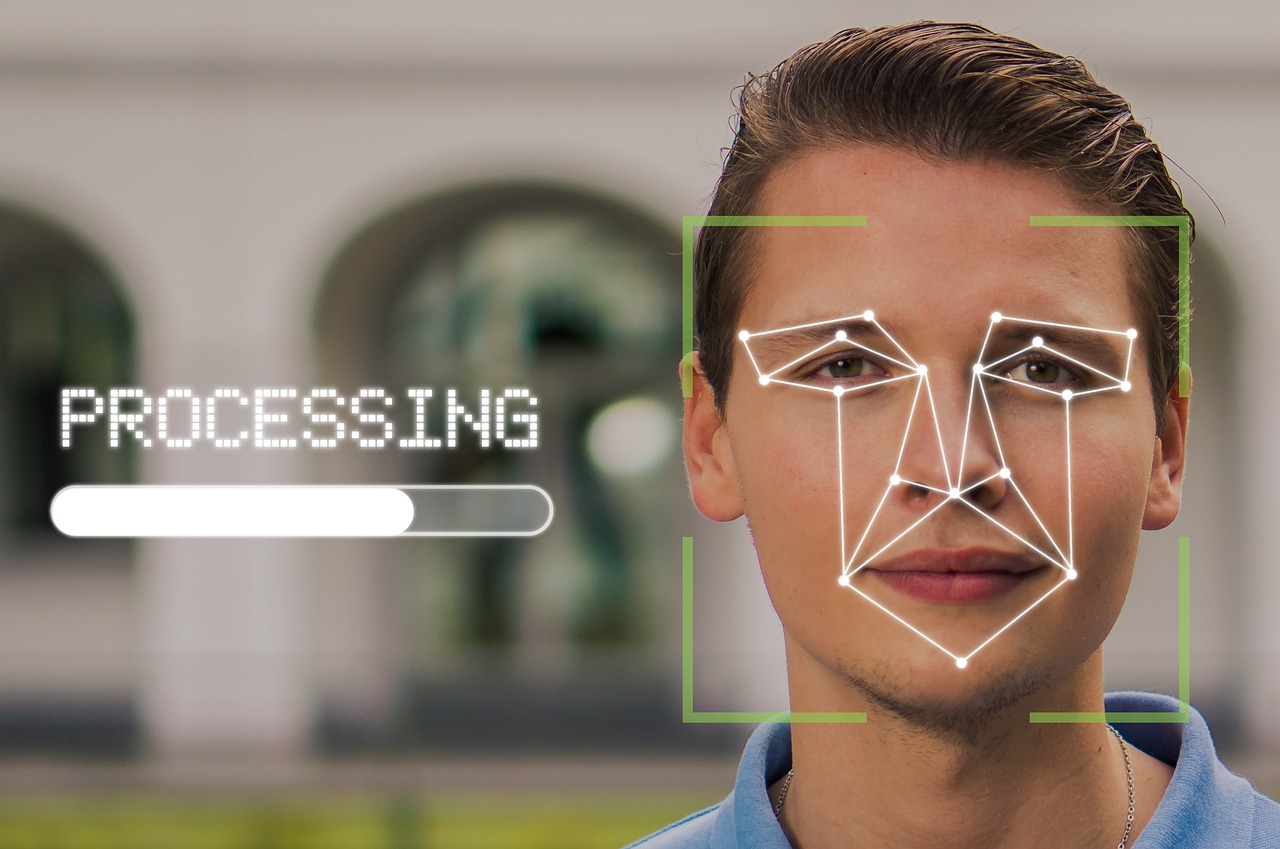

For example, an attacker can pose as a senior executive ‘admitting’ to having committed financial or other white-collar crimes and post the video on a fake social media account. Deepfakes can even be used to fool security measures like facial recognition and voiceprints.

“If not handled effectively, deepfakes – like other forms of digital deception – will seriously undermine public trust in companies and societal institutions,” said Walker.

Tool for good

On the other hand, AI deepfake technology can be a tool for good.

“Businesses can employ deepfakes for their chatbots and customer service representatives,” said Walker. “These AI-driven avatars can converse with customers in a more engaging and lifelike manner, improving user and customer experiences.”

Teachers can use “deepfakes” to recreate historical figures for a more fun and interactive learning experience. Sectors like aviation, healthcare, and manufacturing can use similar “deepfake” technology for employee training and simulation.

“Simulated scenarios featuring lifelike characters can help employees develop necessary skills without real-world risks,” said Walker.

Detecting fakes

For organisations, there are some signs that users can look out for to distinguish fake figures from their real counterparts.

Deepfakes may lack eye movement or blinking, and may show strange facial expressions or unnatural body shapes. They can also have inconsistent head or body positioning, often resulting in jerky movements or distorted imaging when the person moves his or her head.

However, what’s concerning is that consumers tend to overestimate their ability to spot a deepfake, when in reality, modern deepfakes have reached a level of sophistication that prevents detection by the naked eye, said Wells.

Just over half – 53 per cent – of Singapore consumers believe they can accurately detect a deepfake video, according to a Jumio study this year.

“Businesses can educate their employees on deepfake technology, its potential threats, and to approach any video content with scepticism,” said Wells.

Fostering a culture of cybersecurity awareness within an organisation will help employees to understand the importance of vigilance and compliance with security protocols, and to verify the authenticity of content.

According to Wells, technologies are available to mitigate deepfake threats, including liveness detection, which ensures that an authentication system is reading a true biometric source.

Organisations can also adopt multimodal biometrics, together with multimodal liveness detection. This includes supplementing facial recognition with an additional biometric like voice or iris detection.

“Techniques such as correlated mouth movement and speech, and detecting blood flow in the face collectively enhance the security of the authentication process, making it significantly more resistant to spoofing,” said Wells.
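
For readers who want to see how such a layered check might fit together, the sketch below shows one possible decision rule for multimodal biometrics with liveness detection. It is purely illustrative and not how Jumio or any other vendor implements it: the `ModalityResult` class, the `authenticate` function, the score ranges and the threshold values are all assumptions made for this example, and the scores are taken to come from separate matching and liveness systems that are not shown here.

```python
from dataclasses import dataclass


@dataclass
class ModalityResult:
    """Hypothetical output of one biometric check (e.g. face or voice)."""
    name: str              # modality label, such as "face" or "voice"
    match_score: float     # similarity to the enrolled template, 0.0 to 1.0
    liveness_score: float  # confidence the sample came from a live person, 0.0 to 1.0


def authenticate(results, match_threshold=0.8, liveness_threshold=0.9,
                 min_modalities=2):
    """Accept only if enough distinct modalities pass both checks.

    Thresholds are illustrative assumptions, not recommended values.
    """
    # Require at least `min_modalities` different biometrics (multimodal).
    if len({r.name for r in results}) < min_modalities:
        return False
    # Every modality must match the enrolled user AND pass liveness,
    # so a convincing but replayed or synthetic sample is rejected.
    return all(
        r.match_score >= match_threshold
        and r.liveness_score >= liveness_threshold
        for r in results
    )


genuine = [ModalityResult("face", 0.93, 0.97), ModalityResult("voice", 0.88, 0.95)]
spoofed = [ModalityResult("face", 0.95, 0.40), ModalityResult("voice", 0.90, 0.96)]

print(authenticate(genuine))  # both modalities match and pass liveness
print(authenticate(spoofed))  # face matches but fails the liveness check
```

The point of the rule is that a deepfake which closely resembles the victim's face still fails if it cannot pass the liveness check, and that fooling one biometric alone is not enough when a second one is also required.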