The new Intel Lunar Lake processor unveiled this week is set to be a big part of the AI PCs coming out this year, and it’s telling that it is a redesigned chip arriving just a year after another supposedly groundbreaking one from the PC chipmaker.

It has been a while since there was this much competition in the PC processor space, traditionally occupied by just Intel and AMD. Qualcomm, better known for its Arm-based smartphone chips, has its Snapdragon X chips in Copilot+ AI laptops running Windows, out this month.

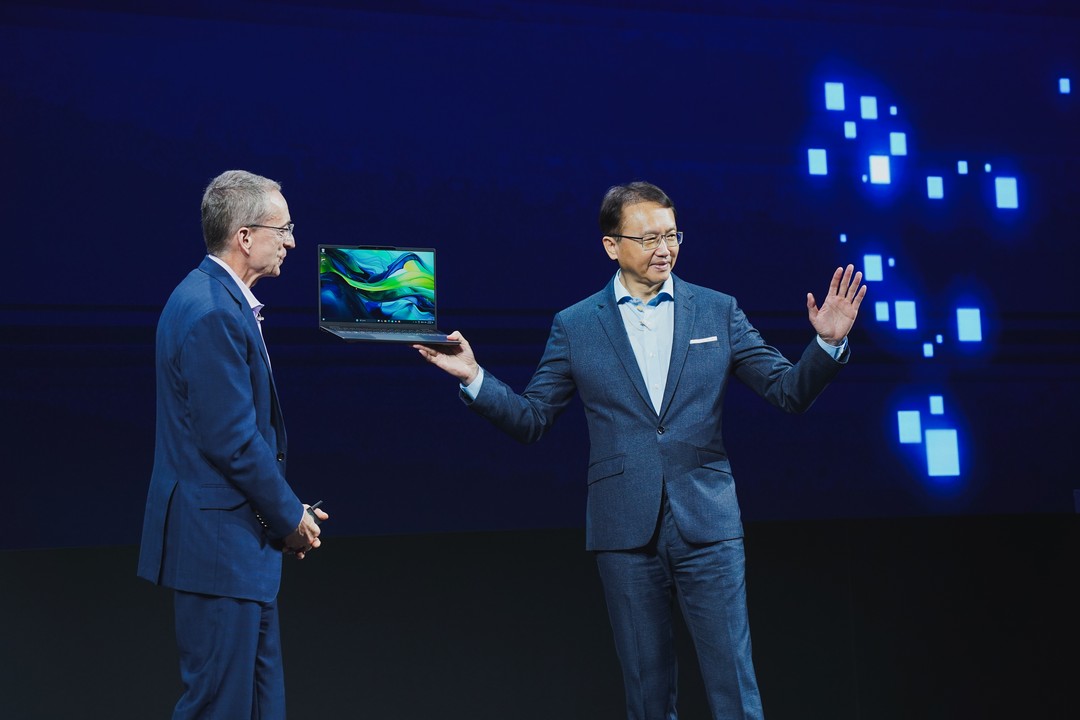

Surely, Intel, which has for so long been the mainstay in PCs, has to respond. In the Lunar Lake processor shown off at the Computex Taipei trade show this week, it at least has an answer to its rivals, even if it is a few months late.

Notably, Intel’s current Core Ultra chips, previously codenamed Meteor Lake, only began shipping in recent months. Lunar Lake, the next generation, is expected to arrive in the third quarter of this year with a number of enhancements.

Chief among them is a beefed-up neural processing unit (NPU). Often touted as the brains behind AI PC tasks, the NPU in Lunar Lake processors now delivers up to 48 tera operations per second (TOPS).

This is more than four times the 11 TOPS on current Meteor Lake-based Intel chips. More importantly, 48 TOPS on the new Lunar Lake NPU clears the minimum 40 AI TOPS Microsoft has specified for its Copilot+ AI PCs.

In comparison, the rival AMD Ryzen AI 300 processor, also launched this week, sports a new NPU that delivers up to 50 TOPS of AI processing power, while Qualcomm claims up to 45 TOPS for its Hexagon NPU.
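To put these NPU figures side by side, the short Python sketch below checks each one against Microsoft’s 40 TOPS minimum for Copilot+ PCs and works out Intel’s generational jump. The numbers are the vendors’ peak claims as reported in this article; the names `NPU_TOPS` and `meets_copilot_plus` are hypothetical, made up purely for illustration.

```python
# Peak NPU throughput in TOPS, as claimed by each vendor (figures from this article).
NPU_TOPS = {
    "Intel Meteor Lake (Core Ultra)": 11,
    "Intel Lunar Lake": 48,
    "AMD Ryzen AI 300": 50,
    "Qualcomm Snapdragon X (Hexagon)": 45,
}

# Microsoft's stated NPU minimum for Copilot+ PCs.
COPILOT_PLUS_MIN_NPU_TOPS = 40


def meets_copilot_plus(npu_tops: float) -> bool:
    """Return True if an NPU's peak TOPS clears the Copilot+ minimum."""
    return npu_tops >= COPILOT_PLUS_MIN_NPU_TOPS


for chip, tops in NPU_TOPS.items():
    verdict = "meets" if meets_copilot_plus(tops) else "falls short of"
    print(f"{chip}: {tops} TOPS {verdict} the Copilot+ minimum")

# Intel's generational jump: 48 / 11 is roughly 4.4x.
jump = NPU_TOPS["Intel Lunar Lake"] / NPU_TOPS["Intel Meteor Lake (Core Ultra)"]
print(f"Lunar Lake vs Meteor Lake NPU: {jump:.1f}x")
```

Only Meteor Lake’s 11 TOPS falls below the bar, which is why Lunar Lake, not the current Core Ultra chips, is Intel’s route into Copilot+ PCs.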

That said, Intel also stresses that the NPU isn’t everything. It is best suited to more “ambient” tasks, such as training anti-malware software to spot issues or running face recognition, where it makes efficient use of a laptop’s battery power, its executives told reporters at a pre-Computex event last week.

Just as important for AI are the CPU and graphics processing unit (GPU) in Lunar Lake processors, they say.

The CPU, which has traditionally been used for machine learning and other tasks, will offer 5 TOPS, while the new Xe2 GPU, with its AI extensions, will offer 67 TOPS. Together with the NPU’s 48 TOPS, users will get up to 120 TOPS in total on a laptop running the Intel Lunar Lake processor.
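The headline 120 TOPS is simply the sum of the three engines’ peak figures, which a few lines of Python make explicit. This is a back-of-the-envelope sketch using Intel’s quoted numbers; `ENGINE_TOPS` is a hypothetical name chosen for illustration.

```python
# Peak AI throughput per engine in a Lunar Lake chip, in TOPS, as quoted by Intel.
ENGINE_TOPS = {"CPU": 5, "GPU (Xe2)": 67, "NPU": 48}

# The platform figure is just the sum of the per-engine peaks: 5 + 67 + 48.
total = sum(ENGINE_TOPS.values())
print(f"Platform total: {total} TOPS")

# Each engine's share of that total.
for engine, tops in ENGINE_TOPS.items():
    print(f"{engine}: {tops} TOPS ({tops / total:.0%} of the total)")
```

The GPU accounts for more than half of the total. Since Intel says a single inference cannot yet be split across engines, no one AI model would see the full 120 TOPS at once; the figure describes the whole platform.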

The CPU, Intel says, should handle AI apps that use deep learning or machine learning software tools such as OpenVINO, which helps accelerate Zoom, and ONNX Runtime, which accelerates Microsoft Office.

The GPU, with its higher TOPS, will be handy for “bursty” AI tasks, for example, to generate text or images with an AI assistant, according to Intel.

This multi-engine approach is practical in real use too. For example, a user may feed new data to the CPU to digest and “learn” from, then use the GPU to generate a summary or response. All the while, the NPU handles speech-to-text transcription as the user speaks to the machine through a microphone.

Can AI inferencing be shared between the three engines (CPU, GPU and NPU)? Not yet, according to Tom Peterson, Intel Fellow and director of advanced graphics experience engineering solutions.

Multiple inferences, say, with the Stable Diffusion image generation tool, can run separately on the CPU and the GPU, but a single inference cannot yet be split across multiple engines, he told reporters at a media briefing.

Another interesting improvement Intel has introduced is memory packaged with the processor in the new Lunar Lake chips, likely those meant for ultraportable laptops.

This not only frees up space on a motherboard so laptop makers can design sleeker devices, but is expected to help with AI tasks as well.

Having 16GB or 32GB of memory on the processor itself could lead to a faster initial response, for example, getting the first word or token out of an AI program sooner, according to Intel. “No matter how many TOPS you have, you need fast access to the first word or token,” said Peterson.

Intel believes upgrading all three engines in Lunar Lake is the right path forward, since AI developers use a variety of them to create apps.

According to the chipmaker, 40 per cent of developers say they build AI agents or content creation tools that tap the GPU, while 40 per cent intend to use the CPU and another 30 per cent or so will increasingly use the newer NPU.

And besides AI performance, there’s a lot more that Intel has packed into the new Lunar Lake processor.

The cores that process computing tasks have also been redesigned. Hyperthreading is out, replaced by “simpler” but wider cores that consume less power.

The chip’s Thread Director feature tries to run tasks on the processor’s Efficient Cores (E-Cores) first, sending demanding multi-threaded workloads to the more powerful Performance Cores (P-Cores) only when needed.
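The “E-cores first, P-cores when needed” idea can be illustrated with a toy placement policy in Python. This is a hypothetical sketch, not how Thread Director is implemented: the real feature works with the operating system’s scheduler and is far more nuanced. The `Task` fields, the `demanding` flag, the `place` function and the core count are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    threads: int      # number of threads the task wants to run
    demanding: bool   # hypothetical flag: does the task need peak performance?


E_CORES = 4  # hypothetical E-core count, for illustration only


def place(task: Task, free_e_cores: int) -> str:
    """Toy E-core-first policy loosely modelled on the description above.

    Demanding multi-threaded work goes straight to the P-cores; anything
    else stays on the E-cores as long as enough of them are free.
    """
    if task.demanding and task.threads > 1:
        return "P-cores"
    if task.threads <= free_e_cores:
        return "E-cores"
    return "P-cores"  # would overflow the free E-cores


free = E_CORES
for t in [Task("email sync", 1, False), Task("video export", 8, True), Task("music", 1, False)]:
    where = place(t, free)
    if where == "E-cores":
        free -= t.threads  # those E-cores are now busy
    print(t.name, "->", where)
```

Light background jobs such as email sync and music playback land on the E-cores, while the video export is promoted to the P-cores, mirroring the power-saving intent Intel describes.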

Despite the obvious excitement in the PC industry, however, the question remains whether users will actually take advantage of the additional horsepower. AI PCs, hyped up by Microsoft and its partners, still have some convincing to do.

Yes, Microsoft is moving some AI tasks from the cloud to the PC through its Copilot+ offering on Windows, but many of the tasks that have moved to the cloud in the past decade or so, such as office productivity (think Office 365 or Google Workspace), are still carried out online.

So, how will AI PCs fare? While new models arriving this year will drive a near-term increase in PC shipments, they are not likely to boost shipments over the long term, according to research firm IDC.

However, they will push buyers towards higher-priced PCs, it predicts, which should be good news for PC makers since shipments are expected to remain flat this year.