AMD is stepping up its game in the AI chip race, aiming to close the gap with market leader Nvidia. At an event in San Francisco on October 10, AMD launched new AI chips designed to power data centres and PCs for GenAI applications.

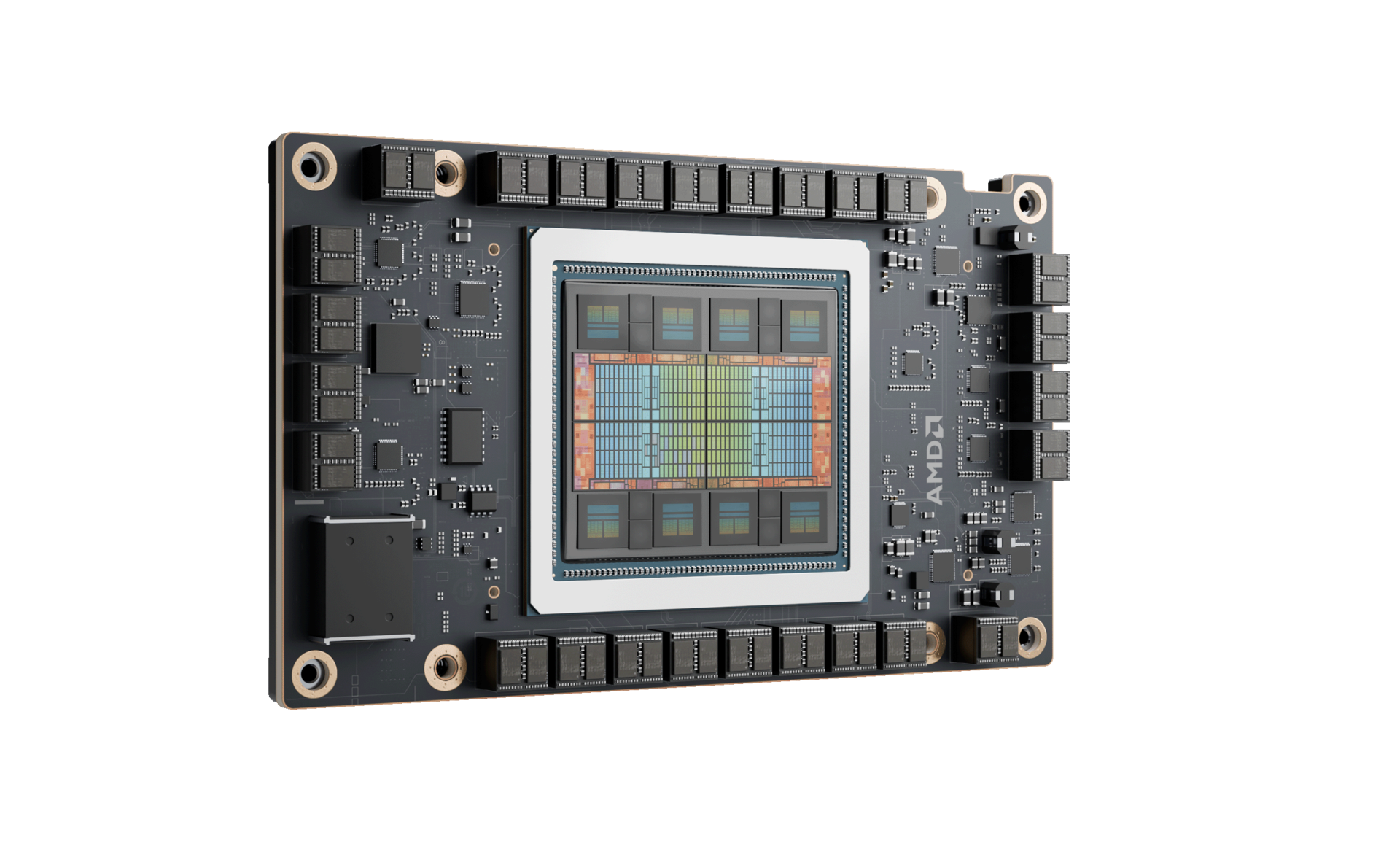

The company unveiled its latest Instinct MI325X chip for data centres, claiming industry-leading performance against Nvidia’s H200 processors. With mass production set for late 2024, AMD is positioning itself as a serious contender in the AI space, a market projected to hit US$400 billion by 2027.

The new Instinct MI325X chip builds on the architecture of AMD’s Instinct MI300X, featuring upgraded memory for faster AI computations. But the real heavyweight is the Instinct MI350, expected to launch in 2025, which promises even more memory and a redesigned architecture for a significant performance boost.

AMD’s aggressive roadmap mirrors Nvidia’s own pace, as both chipmakers race to capture the demand for AI infrastructure.

The company’s overall revenue growth has been driven largely by its data centre business. In the second quarter of 2024, data centre revenue reached US$2.8 billion, a 115 per cent increase on the same period a year earlier.

AMD attributes the increase to a significant ramp-up in Instinct MI300 GPU shipments and a robust rise in EPYC CPU sales, a sign that it can compete in both the AI accelerator and server processor markets.

CEO Dr Lisa Su envisions AMD becoming the “end-to-end AI leader” over the next decade. “You have to be extremely ambitious,” she said at AMD’s media, analyst and developer conference in San Francisco last week. “This is the beginning, not the end of the AI race.”

Under Su’s leadership, AMD has recovered from the brink of bankruptcy and positioned itself to become Nvidia’s closest rival. However, Nvidia’s dominance remains clear: it reported US$26.3 billion in AI chip sales for the quarter ending July 2024, while AMD projects US$4.5 billion in AI chip sales for the entire year.

In addition to its AI accelerators, AMD is updating its server CPU lineup, with a flagship chip offering nearly 200 cores and a 37 per cent speed boost for AI workloads.

AMD’s latest moves come as major technology players like Microsoft and Meta are eager to adopt alternative AI processors, which could help push Nvidia’s pricing down.

AMD’s key challenge remains Nvidia’s Compute Unified Device Architecture (CUDA) software, which has locked many developers into Nvidia’s ecosystem. To counter this, AMD is stepping up investment in its ROCm software, aiming to make it easier for developers to transition to AMD hardware.