For more than a year since they first grabbed the headlines, AI PCs have promised a lot – mainly, faster performance for AI tasks, and particularly for workloads that you can run right on your machine so you don’t risk your privacy by going to the cloud.

At the CES show this year in Las Vegas, there were more AI PCs, along with more AI-capable chips made to power them. Again, the message is that you’d need the added performance on your PC, whether to get a video edited or a quick report summed up with the help of AI.

AMD’s new Ryzen AI Max processor, for example, comes with a powerful neural processing unit (NPU) for AI processing while also offering better performance for other workloads. Rival Intel unveiled new versions of its Core Ultra 200V chips aimed at delivering AI performance for commercial laptops.

The enthusiasm seems a little at odds with reality, though. Despite the hype, the sales of AI PCs have been lacklustre.

In the fourth quarter of 2024, computer sales across the globe only moved up about 1 per cent, whether you follow market research firm IDC or Gartner.

In other words, consumers and businesses have been lukewarm to the concept of AI PCs, perhaps unconvinced that they should run AI tasks on their own machines, when services like ChatGPT or Midjourney are available on the Web.

Or perhaps there aren’t that many compelling AI apps that clearly run better on a PC. AI, or at least GenAI, hasn’t taken long to convince users of its usefulness, so one reason people aren’t buying AI PCs to run it may be that the cloud versions are already pretty good.

What about security and privacy? Well, aren’t people already sending their credit card numbers and e-mails across the Web every day? Could AI workloads be similarly protected from prying eyes?

Perhaps one way PC makers can sell AI PCs better is to look at how games are still so popular on gaming PCs and consoles, despite the availability of cloud gaming services.

Small handheld gaming devices, instead of turning to the cloud, are packing in more processing power to run PC games the way they run on full-fledged rigs.

You can buy LG TVs now that let you play games on the screen – the heavy lifting is done on the cloud for a subscription fee – yet most people have stuck with PCs and game consoles that sport energy-sucking, warm-running graphics cards.

The key differences from AI PCs are the apps and content, plus the seamless integration of the computing that’s done locally and what’s processed remotely on the cloud (or a server farm) to deliver the gaming performance on a PC.

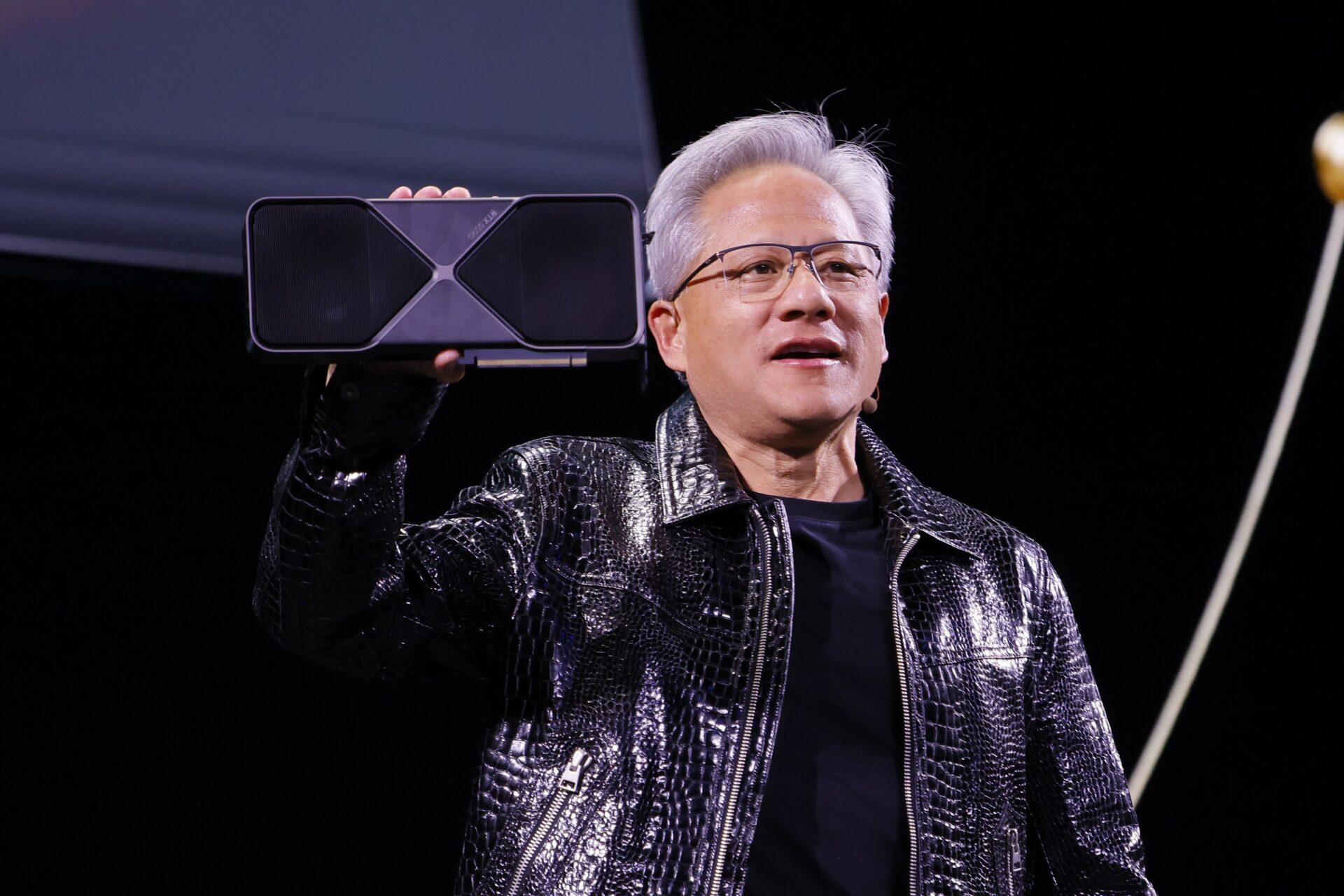

The biggest splash at CES 2025 was made by Nvidia, which unveiled its much-awaited GeForce RTX 50 series of graphics cards. Game performance, it was promised, would be twice as fast as on today’s GeForce RTX 40 series.

The high performance that Nvidia has shown off is only possible with the help of what is known as “frame filling”, or as some cynically call it, fake frames.

Essentially, the multi-frame generation feature in the fourth generation of Nvidia’s AI-powered Deep Learning Super Sampling (DLSS), supported only by the new graphics cards, will try to predict and generate up to three additional frames in a game for each frame that is rendered.
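To put rough numbers on that, here is a minimal back-of-the-envelope sketch in Python. It assumes every generated frame is displayed and ignores any overhead from generating them; the function names and the 30 frames-per-second figure are hypothetical, picked only for illustration and not taken from Nvidia.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Frames shown per second if each rendered frame is followed by
    a fixed number of AI-generated frames (idealised, no overhead)."""
    if rendered_fps < 0 or generated_per_rendered < 0:
        raise ValueError("inputs must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


def generated_share(generated_per_rendered: int) -> float:
    """Fraction of displayed frames that were generated rather than rendered."""
    if generated_per_rendered < 0:
        raise ValueError("input must be non-negative")
    return generated_per_rendered / (1 + generated_per_rendered)


if __name__ == "__main__":
    # Hypothetical game rendering 30 frames per second the old way,
    # with the maximum of three generated frames per rendered frame.
    print(displayed_fps(30, 3))   # frames shown per second
    print(generated_share(3))     # share of frames that are AI-generated
```

Under these idealised assumptions, three out of every four frames on the screen are predicted rather than rendered, which is where the "fake frames" jibe comes from.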

To do so, it makes use of the machine learning that Nvidia has already done on its servers, which analyse images from games and can now accurately help create these additional frames in a game without working extra hard to actually pump all of them out the old way.

The graphics card that you are buying, say, the top-end US$2,000 GeForce RTX 5090, still has to have the horsepower to do this work, despite much of the learning being done by Nvidia on its server farms.

Older Nvidia graphics cards are limited to upscaling or single-frame generation, which don’t boost performance the same way.

With its new graphics cards, Nvidia is pushing the boundaries of the computing that is done “locally” on your PC. Yes, the latest GeForce RTX 50 cards are still needed for the top-notch performance but they are not pushing out frames and pixels by simply juicing up raw performance.

They also rely on AI that has been trained elsewhere, on much more powerful machines, to do the work of generating the eye-popping graphics smoothly on your PC.

These new graphics cards need to be smart enough to piece together the puzzle, but the concept of piecing it together has been learned or understood on a server elsewhere.

In many ways, this is an excellent combination of getting a taxing job done on a PC and remotely as well.

And Nvidia isn’t the only one – rivals AMD and Intel offer their versions of AI-boosted performance software. They have managed to make the combination mostly seamless, though some gamers still report artifacts in some games.

Unlike gaming, however, other apps on a PC have migrated to the cloud in the past decade. The processing is done mostly remotely.

Years after moving so much online, from Microsoft Office to Adobe Lightroom, now PC makers want users to believe their interests are best served by using AI that is run on their PC hardware. That takes some convincing.

As with Nvidia and games, regular PC apps can also take advantage of the huge AI models that make today’s AI services appear smart.

Windows’ Copilot AI companion, for example, makes use of the advances that partner OpenAI has made. Yes, it helps you create a greeting to send to a friend or make a list of talking points to bring to a meeting with a client.

However, that argument needs to be stronger for potential buyers to embrace AI PCs as the new, indispensable tools they need to get work done every day.

What’s needed are apps that run so much better on AI PCs than on the cloud, say, for generating videos effortlessly. Or perhaps in future, everyone will believe they need to train some form of AI model that suits their particular job specifically.

Until then, the 1990s saying “the network is the computer” still holds. It dates from the time when people first discovered the Internet and then created cloud computing to offload so much of today’s number crunching and data processing.

Some cloud-based services today – think of Netflix or Spotify – appear to have cracked the code for online delivery. Others like gaming still require powerful hardware from users.

AI workloads running on AI PCs? Still very much a hard sell in 2025, unless compelling AI apps and streamlined AI performance across different hardware arrive earlier than expected.